Tech PR & Digital Marketing

The award-winning full-service PR and Digital Marketing agency for tech companies in Africa – with a proven track record of results.

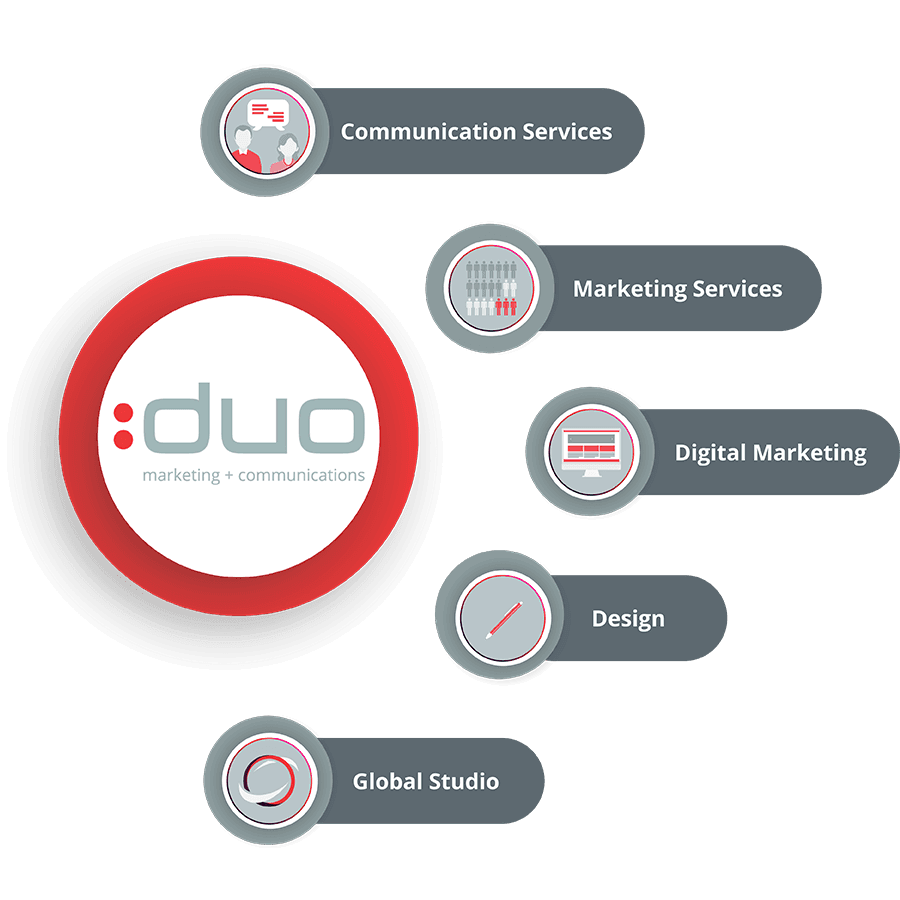

Communication Services

- Crisis Communication

- Strategy

- Media Training

- Media Events

- Content Creation

- Investor Relations

- Internal Communications

- Personal Profiling

Marketing Services

- Messaging & Branding

- Research

- Visual Language

- White Paper Development

- Advertising

- Media Buying

Digital Marketing

- SEO

- Lead Generation

- PPC

- Social Media Advertising

- Social Media Strategy

- Website Development

- Video Production

- Email Marketing

- Webinars

- Podcasts

- Blog Content Writing

- Website Maintenance

Design

- Branding & Identity

- Video Production

- Website/Webpage Design

- Custom Newsletters & Templates

- Graphic Design

Global Studio

- Written Content

- Creative Design Content

- Digital Marketing Campaigns

- Content Analytics

PR, Communications & Marketing Services

Communications

Our team of Tech PR professionals focuses on strategy, public relations, media relations and media training for our clients in the technology sector.

Marketing Services

We specialise in media buying, visual language, research, white paper and case study development, messaging and branding, video production and advertising.

Digital Marketing Services

Let us help you grow your brand’s online presence and drive business growth with website development, social media marketing, lead generation campaigns, website maintenance, PPC, podcasts, webinars, SEO and more.

Design

Design is essential in tech marketing: it makes complex ideas accessible, enhances user experience, and strengthens brand identity. It creates a strong first impression, clarifies information with visuals, and drives engagement across platforms.

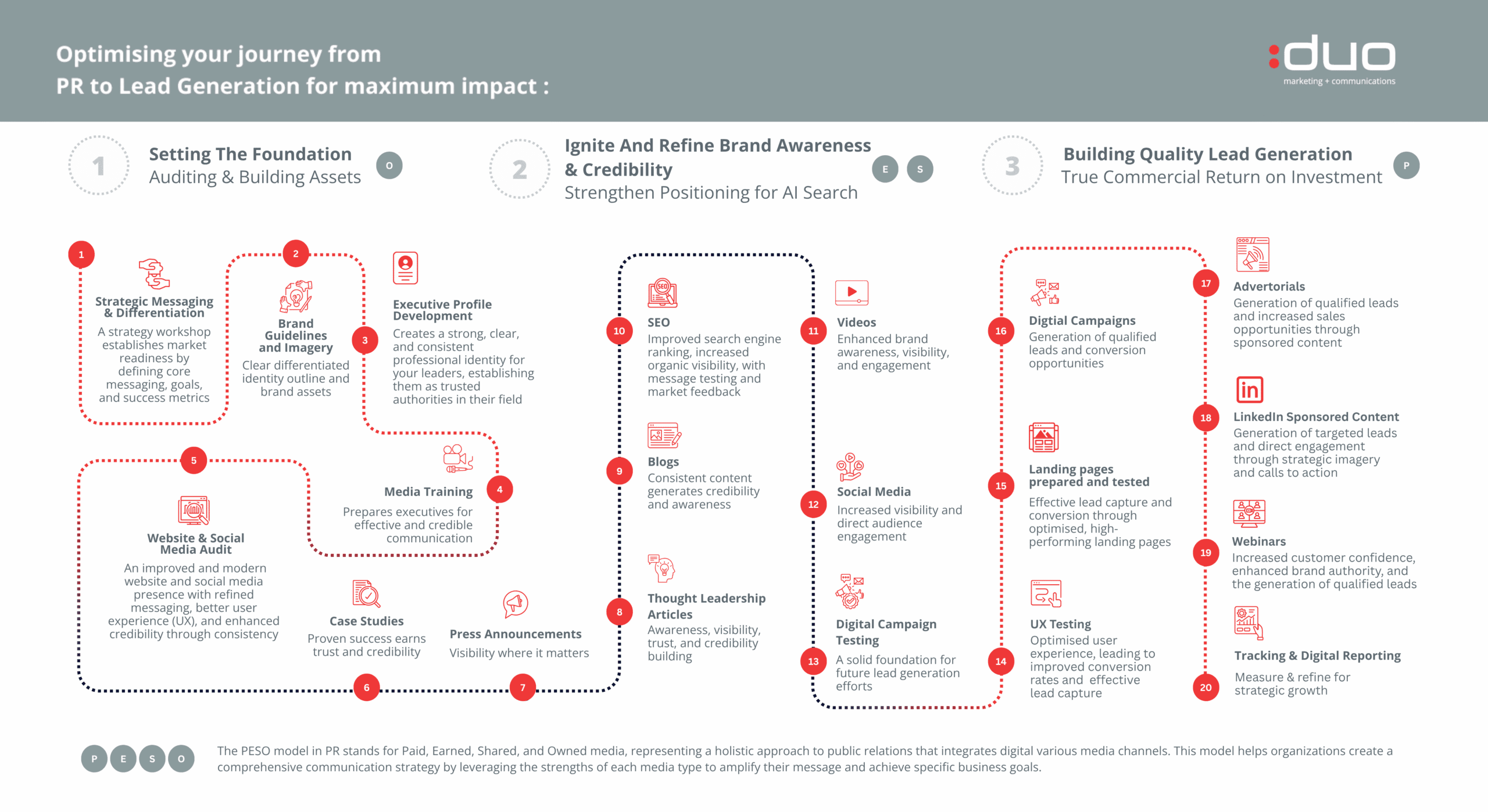

Our Strategic Approach

Navigating Change, Driving Growth: The Dragonfly Effect

Like the dragonfly, DUO Marketing + Communications embodies agility, transformation, and a powerful sense of protection.

We navigate the dynamic landscape of marketing and communications with clear vision and strategic precision, adapting swiftly to industry shifts and acting as a shield for our clients’ brands. Just as the dragonfly guards its territory, DUO safeguards your reputation and ensures your message resonates authentically.

Partnering with DUO

A global award-winning Tech public relations, communications, and digital marketing agency with 21 years of specialist Tech experience.

Experienced strategists and account managers to deliver results that match your business objectives.

Integration and amplification of PR with digital, including social media, media buying for advertorial and advertising placement, lead generation campaigns, influencer campaigns and more.

Full spectrum of PR services, including crisis management for tech brands and investor relations for listed companies.

Award-winning Tech journalists to translate your technology value into business and commercial value.

Content writers, designers, SEO experts (using AI for optimum results) and pay-per-click (PPC) management to elevate your brand’s visibility online.

Monthly reports to match real business objectives, and full accountability of your investment and brand elevation needs.

Results In Action: Case Studies

Be inspired by the successes we have helped our tech clients achieve.

Beyond the target: How DUO elevated Braintree’s brand and drove massive awareness

20 Years of Partnership: How DUO and Vox Built a Lasting Legacy of PR Success

How PR and Digital Marketing helped position Braintree as a Microsoft Dynamics leader in Africa

Evidence-based, strategic PR and marketing for Telviva helps build blueprint for future of communications

DUO uses digital marketing and quality thought-leadership to strengthen Telviva’s brand

Integrated digital marketing and PR campaign builds targeted brand awareness for Braintree

Ecentric: PR is the foundation for real return on investment through lead generation

Point of impact: Your vision, our experience and expertise

Tech is our playground, whether B2B or B2C. For over two decades, we’ve been turning technical jargon into narratives that engage, educate, and inspire action. Our track record as a tech PR and digital marketing agency speaks for itself – we do not simply create content; we create conversations that translate to leads, revenue, and brand loyalty.

Our aim is to be the complete marketing and communications partner, facilitating growth and enabling greatness for tech companies by harvesting business value out of technology. We believe a holistic strategy reaps the best results and return on investment.

Our PR and digital marketing agency is headquartered in Cape Town and recognised as a leading Tech PR and digital marketing agency in Africa, leveraging unique partnerships to drive client success across Africa and internationally.

a voice for over 20 years

Best Tech PR and Digital Marketing Agency, 2021, African Excellence Award

Best Tech Focused PR and Digital Marketing Agency in Africa, 2021 & 2022, Media Innovator Awards

Best B2B Technology Communications Agency Africa, 2021, MEA Business Awards

Best Tech PR and Digital Marketing Agency, 2022, Global Business Insights Awards

Best Full-Service Tech-Focused PR & Marketing Agency 2024 – Sub-Saharan Africa

Best Tech PR and Digital Marketing Agency, 2024, Global Business Insights Awards

Meet Our Team

Meet the people at the heart of DUO

Client Testimonials